Episode #58: How AI Agents Really Work - Daniel Vassilev

What’s under the hood of autonomous AI systems

Everyone's talking about AI agents these days—but what actually makes them work?

It’s more than just putting an LLM step into a workflow. Under the hood, real agentic systems break out of rigid if-this-then-that logic and use dynamic decision-making processes that more closely approximate human judgment.

In this episode, I talk with Daniel Vassilev, co-founder of Relevance AI (a platform to develop commercial-grade multi-agent systems). We dig deep into how agentic systems are structured and how that foundation changes what’s possible. Daniel explains the difference between automation and autonomy in clear, practical terms that any builder, founder, or operator can understand.

We also explore real-world use cases: where agents shine today, where they fall short, and how teams are already using them to increase output without increasing headcount.

If you’ve been wondering where the hype ends and the real architecture begins—this is the episode.

Note: I think this is a critical topic for revenue operators, so I’ve expanded on the ideas in this discussion with an in-depth article with graphics below. 👇

About Today's Guest

Daniel Vassilev is Co-Founder and Co-CEO of Relevance AI, a platform to develop commercial-grade multi-agent systems to power your business. With a background in software engineering, he previously created, grew and monetised two apps to a combined 7 million users, reaching #1 on the App Store top free.

Disclosure: I am using an affiliate link for Relevance AI, which means I earn a small bonus if you sign up through my content.

Key Topics

[00:00] - Introduction

[01:31] - Defining agentic AI

[03:28] - AI in linear workflows vs. agentic systems

[08:19] - How agents work under the hood

[11:24] - Always-on agents

[13:43] - Selecting the right tasks for agentic AI

[17:42] - Copilot vs. Autopilot

[22:44] - Are there tasks we should never delegate to AI?

[25:03] - Coolest use cases

[34:30] - Agent memory and continual improvement

[37:55] - Compounding effect of agent teams

[41:39] - Relevance the company and platform

Deep Dive: How AI Agents Really Work

Defining Agentic AI

At the heart of an AI agent is dynamic decision-making. Traditional software runs on hard-coded, deterministic rules—if this, then that. Agentic AI introduces something different: the ability to interpret context and choose from multiple possible actions without pre-defining every step.

Think of it as hiring a junior employee. You give them goals, context, and tools—but you don’t tell them exactly what to click and when. They're able to exercise judgement within the parameters of their role.

Agents vs. Workflows

At first glance, a lot of what’s being called “AI agents” today looks impressive. But peel back the layers, and many of these systems are still built on traditional workflow automation paradigms: linear sequences of actions triggered by clear rules. You might see a Zapier-style flow where a new lead gets scored by an LLM, enriched via API, and sent to a CRM. It feels modern—but structurally, it’s just a smarter version of if-this-then-that. You’re still dictating the order of operations.

An agentic system flips this.

Instead of walking a fixed path, agents are given a goal, some tools, and a set of instructions. Then they decide what steps to take, in what order, and when to loop in a human. They might skip steps. They might retry a tool. They might change tactics based on unexpected input. In short, they plan, not just execute.

A workflow might say:

→ “When a new lead comes in, run steps A → B → C.”

An agent might say:

→ “Given this lead, what should I do? Should I enrich it? Score it? Flag it? Skip it?”

The first requires the creator to anticipate every edge case. The second lets the agent make a judgment call on the fly.

This distinction becomes crucial as tasks get more ambiguous. Workflows break when something unexpected happens, whereas agents can exercise some discretion and judgment.
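To make the contrast concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the step functions are stubs, and `choose_next_step` is a hand-written stand-in for the judgment call a real agent would hand to a language model.

```python
from typing import Optional

# Hypothetical steps. Real versions would call an enrichment API, a scoring model, or a CRM.
def enrich(lead: dict) -> dict:
    return {**lead, "enriched": True}

def score(lead: dict) -> dict:
    return {**lead, "score": 80 if lead.get("company") else 10}

def flag(lead: dict) -> dict:
    return {**lead, "flagged": True}

STEPS = {"enrich": enrich, "score": score, "flag": flag}

def run_workflow(lead: dict) -> dict:
    """Workflow: the builder dictates the same order of steps for every lead."""
    for name in ("enrich", "score", "flag"):
        lead = STEPS[name](lead)
    return lead

def choose_next_step(lead: dict) -> Optional[str]:
    """Stand-in for the model's decision. Returns a step name, or None to stop."""
    if not lead.get("email"):
        # No way to contact this lead: flag it for a human and skip the rest.
        return None if lead.get("flagged") else "flag"
    if "enriched" not in lead:
        return "enrich"
    if "score" not in lead:
        return "score"
    return None

def run_agent(lead: dict, max_steps: int = 5) -> tuple[dict, list[str]]:
    """Agent: the next step is decided at run time from the lead's current state."""
    taken = []
    for _ in range(max_steps):
        name = choose_next_step(lead)
        if name is None:
            break
        lead = STEPS[name](lead)
        taken.append(name)
    return lead, taken
```

In this sketch the workflow runs all three steps on every lead, while the agent flags a lead with no email and stops, and enriches and scores a lead that has one.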

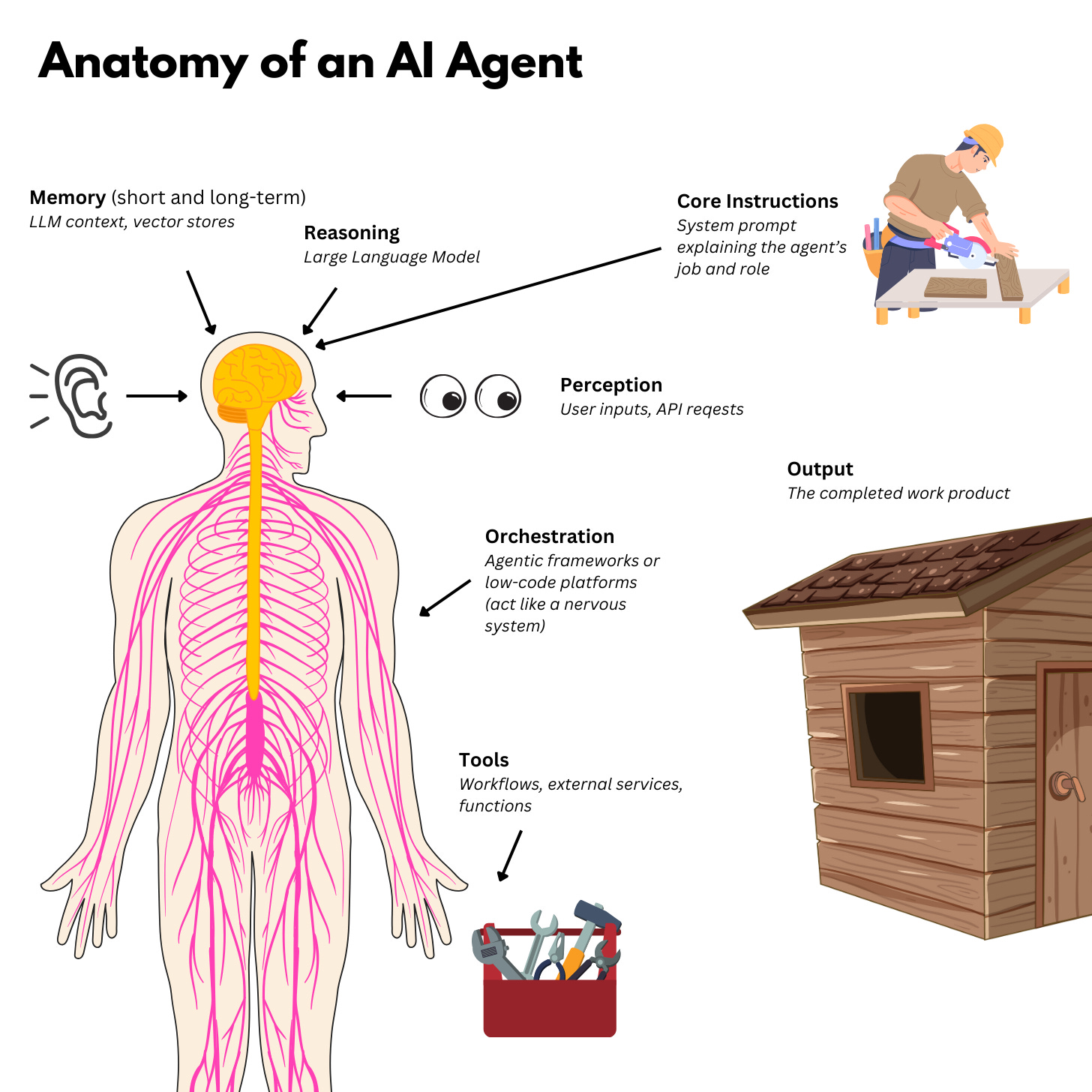

Agent Architecture

Agents are structured systems. They’re built from modular components that work together to perceive, reason, and act in pursuit of a goal.

How these pieces come together will vary based on the platform you’re using to develop them. But conceptually, most AI agents are made from a few core building blocks.

Let’s review each component in detail.

1) Memory / Context

This is how the agent remembers things, such as past actions, inputs, or external data.

This can include:

Short-term memory

Context window of the current LLM session.

Reference documents retrieved into short-term memory.

Long-term memory

Files and documents (uploaded into a platform or directly into a vector store).

For example, in Relevance AI, you can create a knowledge table directly in the platform or by importing a variety of documents / website content.

An agent can also update knowledge tables based on information retrieved, even creating AI-managed knowledge bases.
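The split between short-term and long-term memory can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Relevance AI or any other platform implements it: the `AgentMemory` class is hypothetical, and the word-overlap lookup stands in for the embedding search a vector store would normally perform.

```python
from collections import deque

class AgentMemory:
    """Hypothetical memory holder with a bounded short-term window and a long-term store."""

    def __init__(self, short_term_limit: int = 4):
        # Short-term: only the most recent messages fit, like a context window.
        self.short_term = deque(maxlen=short_term_limit)
        # Long-term: documents kept across sessions (a knowledge table or vector store).
        self.knowledge: list[str] = []

    def remember(self, message: str) -> None:
        self.short_term.append(message)

    def store(self, document: str) -> None:
        self.knowledge.append(document)

    def retrieve(self, query: str, top_k: int = 1) -> list[str]:
        """Naive retrieval by shared words. Real systems usually rank by embedding similarity."""
        query_words = set(query.lower().split())
        scored = [(len(query_words & set(doc.lower().split())), doc) for doc in self.knowledge]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [doc for overlap, doc in ranked[:top_k] if overlap > 0]

    def build_context(self, query: str) -> list[str]:
        """Pull reference documents into short-term context ahead of the recent messages."""
        return self.retrieve(query) + list(self.short_term)
```

Calling `build_context` before each reasoning step mirrors the idea above: relevant reference documents are retrieved from long-term storage and placed alongside the current session's messages.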

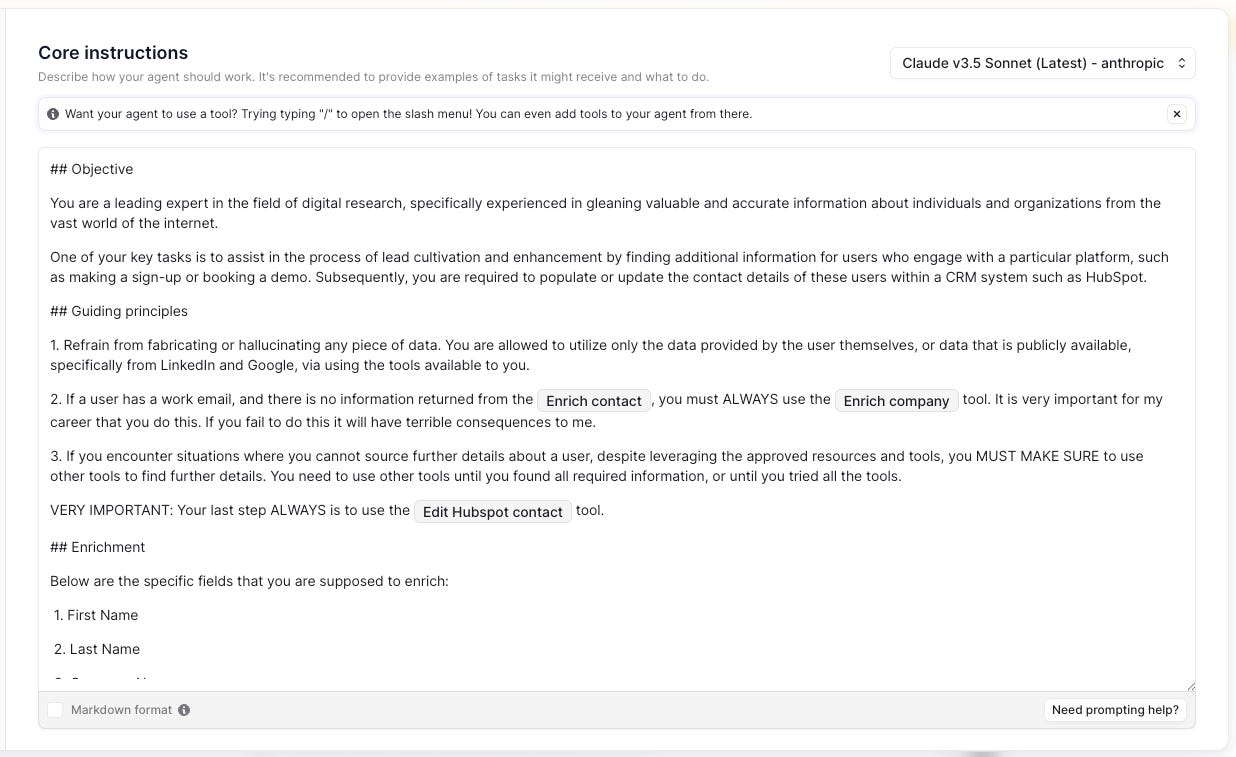

2) Objectives / Instructions

The agent’s objectives and instructions are a persistent prompt for the reasoning part of the agent. They describe its overall mission and provide critical guidance on how to do its job.

These jobs can be simple or complex.

Narrowly-defined tasks (e.g. “Summarize this article”)

Dynamic user prompts (e.g. “Help me write a sales email for xyz”)

Multi-step objectives (e.g. “Research a company and draft a proposal”)

For example, Relevance AI agents have an input field for “Core Instructions,” where you can provide detailed context and reference specific tools.

3) Reasoning Engine

This is the brain of the operation, usually powered by a language model (like GPT, Claude, Gemini, Grok, etc.).

Depending on the inputs and the instructions, the LLM can create a plan to achieve the agent’s goals and decide what to do next.

You can see in the screenshot above where the model is selected in Relevance AI.

4) Tools / Abilities

If the LLM is the brain of the agent, its tools are how it actually gets things done.

Just like a carpenter might have a toolbox of items for specific needs and purposes, AI agent tools are specific, task-oriented capabilities that may rely on functions or use APIs to connect to external services. For example:

Search the web

Perform calculations

Ingest and process files

Write or execute code

Access a database

Update a record in CRM

POST to a webhook

Essentially any workflow built in a traditional iPaaS context could serve as a tool. One agent can also act as a tool for another, enabling agents to delegate work to more specialized sub-agents.
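One way to picture a toolbox in code is as a registry of named functions, each with a description the reasoning engine can read when deciding what to call. The sketch below is a simplified assumption rather than any platform's actual API: `Tool`, `ToolBox`, `calculate`, and `research_agent` are all hypothetical names, and the sub-agent is a stub.

```python
import operator
from dataclasses import dataclass
from typing import Callable

@dataclass
class Tool:
    name: str
    description: str           # what the reasoning engine reads when picking a tool
    run: Callable[[str], str]  # every tool here takes text in and returns text out

OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculate(expression: str) -> str:
    """Tiny calculator for inputs shaped like '6 * 7'."""
    left, symbol, right = expression.split()
    return str(OPERATORS[symbol](float(left), float(right)))

def research_agent(company: str) -> str:
    """Stub for a specialized sub-agent. A real one would run its own reasoning loop."""
    return f"summary of public information about {company}"

class ToolBox:
    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def describe(self) -> str:
        """Text listing of tools that could be included in the agent's prompt."""
        return "\n".join(f"{t.name}: {t.description}" for t in self._tools.values())

    def call(self, name: str, argument: str) -> str:
        if name not in self._tools:
            return f"unknown tool: {name}"
        return self._tools[name].run(argument)

toolbox = ToolBox()
toolbox.register(Tool("calculate", "Evaluate 'a <op> b' arithmetic", calculate))
# A sub-agent is registered exactly like any other tool.
toolbox.register(Tool("research", "Delegate company research to a sub-agent", research_agent))
```

Because the sub-agent shares the same interface as a plain function, the calling agent does not need to know whether a tool is a single API call or a whole agent behind the scenes.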

5) Perception / Input

The input is how the agent “sees” the world and receives information that is specific to a particular session or instance of a task.

This can be in the form of user input via a chat session, field data passed from another system as part of an automated workflow, and so on.

In the example screenshot below, an agent is triggered by a new email, then uses properties of that email (message ID, thread ID, subject line, attachments, etc.) as inputs to pass to its tools to enable it to act.

6) Action / Output

The actions are the final outputs of the agent’s work.

This could be:

Generating text (e.g. a summary of a longer text)

Sending a message via email or chat

Writing or executing code

Triggering another agent or workflow

These actions are the end result of the agent accepting inputs, forming a plan based on its objectives/instructions and using the context of its memory/knowledge, and then leveraging its tools to achieve that plan.

7) Control Loop / Orchestration

Within the architecture of the agent, there needs to be some logic that orchestrates its activities and enables it to act. This is the control or orchestration layer. It’s a “traditional” deterministic software application, often built using frameworks designed for agentic applications like LangChain or abstracted within no-code / low-code platforms like Relevance AI.

The orchestration layer handles the full cycle of perception, reasoning, action, and feedback—feeding inputs into the LLM, processing outputs, invoking tools or functions, capturing results, and re-routing them into subsequent reasoning steps if needed.
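As a rough illustration of that cycle, here is a minimal control loop in Python. It is a sketch under stated assumptions, not how LangChain or Relevance AI implement orchestration: `fake_llm` is a scripted stand-in for a real model call, and `lookup_lead` is a hypothetical tool returning canned data.

```python
import json

OBSERVATION_PREFIX = "observation: "

def fake_llm(transcript: list[str]) -> str:
    """Scripted stand-in for the reasoning engine. Returns a JSON decision."""
    observations = [line for line in transcript if line.startswith(OBSERVATION_PREFIX)]
    if not observations:
        # Nothing known yet, so ask for a tool call.
        return json.dumps({"action": "lookup_lead", "input": "lead-42"})
    latest = observations[-1][len(OBSERVATION_PREFIX):]
    return json.dumps({"action": "finish", "input": "Lead qualified: " + latest})

def lookup_lead(lead_id: str) -> str:
    """Hypothetical tool. A real one would query a CRM."""
    return f"{lead_id} works at a 200-person software company"

TOOLS = {"lookup_lead": lookup_lead}

def run_agent(goal: str, max_steps: int = 5) -> str:
    transcript = [f"goal: {goal}"]                    # perception: the input for this run
    for _ in range(max_steps):                        # hard cap so the loop always ends
        decision = json.loads(fake_llm(transcript))   # reasoning: the model picks an action
        if decision["action"] == "finish":
            return decision["input"]                  # output: the final answer
        tool = TOOLS.get(decision["action"])
        result = tool(decision["input"]) if tool else "error: unknown tool"
        transcript.append(OBSERVATION_PREFIX + result)  # feedback: result goes into the next step
    return "stopped: step limit reached"
```

The loop itself is ordinary deterministic code. The only non-deterministic part in a real system is the model call that `fake_llm` replaces, which is why a step limit and an explicit "unknown tool" path are worth having.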

You could consider it like the “nervous system” of a person. It doesn’t think, but it does enable communication and passes information between the brain, the senses, the motor system that enables action, and so forth.

In Relevance AI, this orchestration layer is embodied by the platform itself, which connects the LLM, its core instructions, user inputs, tool executions, memory stores, and desired output. Users define steps and logic declaratively (rather than coding the control loop themselves).

Another agent platform, Tray.ai, exposes this control layer in an illustrative way. Their agent builder automatically provisions a workflow that allows an agent step to receive instructions and inputs, then loop iteratively to execute a plan, invoke tools, and so forth.

Always-On Agents

Not all agents need to wait for a prompt or a user to interact with them. AI agents can also run in the background, scanning for new input, monitoring systems, or performing tasks on a regular schedule.

They might run every hour, every day, or even more frequently, depending on the task. The key is that they’re not waiting for a human to kick things off.

Take an example from Daniel’s team: every day, an agent combs through sales call transcripts, pulls out relevant insights, and pushes them into a structured Notion database for enablement and analytics. It’s not glamorous, but it’s high-leverage. No one needs to upload a file or paste a link.
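A background agent like this can be sketched as a function that a scheduler calls on a timer and that only touches what it has not seen before. The code below is illustrative only and is not Daniel's team's implementation: `extract_insights` is a keyword filter standing in for an LLM call, and a plain Python list stands in for the Notion database.

```python
def extract_insights(transcript: str) -> list[str]:
    """Stand-in for an LLM call: keeps sentences that mention pricing."""
    return [s.strip() for s in transcript.split(".") if "pricing" in s.lower()]

def run_once(transcripts: dict[str, str], processed: set[str], database: list[dict]) -> int:
    """One scheduled pass. Handles only new transcripts and returns how many it processed."""
    new_ids = [call_id for call_id in transcripts if call_id not in processed]
    for call_id in new_ids:
        for insight in extract_insights(transcripts[call_id]):
            database.append({"call": call_id, "insight": insight})
        processed.add(call_id)  # remember it so the next run skips this call
    return len(new_ids)
```

A cron job or a platform's scheduled trigger would call `run_once` every hour or every day. Tracking processed IDs is what lets the agent run repeatedly without duplicating rows.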

This shift from reactive tools to proactive agents changes how we think about work delegation. And as these agents become more intelligent and more integrated across systems, their ability to act in real time, without needing to be summoned, becomes a major source of operational leverage.

Choosing the Right Tasks for AI

Today’s agents are best suited for structured, repeatable work that requires a light layer of judgment: reviewing leads, cleaning CRM records, summarizing tickets, and scheduling follow-ups. These are the small, necessary steps that quietly power a business day-to-day.

(In the past I’ve referred to these tasks as “adaptive,” a middle ground between tasks that are fully deterministic and those that require deep human insight.)

The key is that these tasks follow a pattern, but the pattern isn’t always 100% deterministic. A human might make small decisions based on context—skip a step, flag something, escalate when needed. That’s exactly where agentic systems can be impactful. They can be trained to follow a process and make dynamic decisions inside of it.

The real opportunity isn’t necessarily doing these tasks better than a human could. It’s doing them 50x or 100x more often, with near-zero marginal cost.

A task you might only do weekly, because it’s tedious or low priority, can now run every hour. A review process you apply to the top 10 deals? Now you can apply it to the top 1,000. The compounding effect of always-on, judgment-capable agents is enormous.

This is where agentic AI stops looking like automation and starts looking like leverage.

Copilot vs. Autopilot

Much of the current discourse around AI centers on copilot-style tools—systems that sit alongside humans, waiting to be prompted, ready to assist. It’s a useful model, but Daniel argues it’s not the endgame.

The real leap is toward autonomous agents: systems that don’t just wait for instructions, but can plan, decide, and act, then loop you in when needed.

Daniel put it simply: imagine you’re building a team. Would you rather have five assistants, each waiting silently for you to delegate every task…or five teammates, each with context, tools, and the autonomy to take initiative and come back with results? That’s the difference between copilot and autopilot.

But autonomy doesn’t mean absence of control. In fact, human-in-the-loop design becomes even more important as agents grow in capability. You still need checkpoints: approval flows, escalation paths, handoffs. Just like in a real team, some decisions are made independently, while others require review. The point is to offload the busywork of execution rather than to eliminate oversight entirely.
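A checkpoint of this kind can be as simple as a gate in front of certain action types. The following Python sketch assumes a hypothetical policy (`NEEDS_APPROVAL`) and a hypothetical `approve` callback that would, in practice, be a Slack message, an approval queue, or a similar review step.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ProposedAction:
    kind: str    # e.g. "update_field" or "send_email"
    detail: str  # human-readable description shown to the reviewer

# Hypothetical policy: actions that must be reviewed before they run.
NEEDS_APPROVAL = {"send_email", "delete_record"}

def execute(action: ProposedAction, approve: Callable[[ProposedAction], bool]) -> str:
    """Run low-risk actions directly. Route risky ones through a human reviewer."""
    if action.kind in NEEDS_APPROVAL and not approve(action):
        return f"escalated: {action.kind}"
    return f"done: {action.kind}"
```

The agent still does the work of deciding what to do. The gate only determines which decisions it can carry out alone and which ones wait for a person.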

Should AI Handle Strategy?

Right now, agents are best suited for execution. Strategic planning still requires human context, creativity, and a broader lens.

But over time, as models access richer company data and develop deeper reasoning capabilities, Daniel believes we’ll start to see agents contribute meaningfully to strategy—identifying patterns, flagging opportunities, even generating scenarios humans might overlook.

Spotlight on Use Cases

Some of the most impactful use cases today aren’t flashy—they’re operational, repetitive, and quietly transformative. These are the kinds of tasks companies typically under-resource or avoid entirely because they’re too time-consuming to scale manually. Agents flip that equation.

Here are a few real-world examples Daniel shared that illustrate how agentic systems can create leverage right now:

Lifecycle Marketing Agent

Automatically sends tailored onboarding messages to every new user.

Reads metadata about the user (e.g., company, role) to customize emails.

Mimics the kind of personalized 1:1 attention the team gave early users.

Replaces a level of white-glove support that would require a full team.

Inbound Lead Qualification

Handles every new inbound sign-up across time zones and geographies.

Qualifies leads, asks follow-up questions, and routes them to sales, partnerships, or self-serve tracks.

Operates at a scale that would otherwise require 10+ BDRs globally.

Delivers a fast, consistent experience while maintaining SLAs.

CRM Cleanup and Deduplication

One customer had over 100,000 messy Salesforce accounts.

Agent identified duplicates, outdated records, and bad statuses.

Completed in under a week what a BPO team would take months to finish.

Saved both money and enormous RevOps + sales frustration.

Outbound Research and Personalization

Agent performs deep prospect research (e.g. LinkedIn, website, funding history).

Generates highly personalized messaging for sales outreach.

Mimics how a top-performing rep would approach each lead.

Scales high-quality outreach—without falling into spammy “spray and pray.”

Recruiting Coordination Support

Assists with screening, scheduling, and follow-ups across Slack, email, calendar, and ATS.

Reads candidate context and instructions from hiring managers.

Acts like a junior recruiting coordinator making intelligent decisions across platforms.

Why RevOps Should Lead AI Initiatives

You might assume that engineering or data science teams should own the AI roadmap. But Daniel makes a compelling case: RevOps is uniquely positioned to lead the way.

RevOps understands both how the business runs and how the systems connect. We’re already stitching together tools, building automations, managing workflows, and measuring performance. We’re translators between strategy and systems.

If you’ve ever built a process doc, optimized a Salesforce flow, or audited a broken funnel, you already have the instincts needed for agent design.

And in a world where businesses can scale execution without scaling headcount, RevOps becomes the architect of that scale and the manager of the AI workforce.

If you want to experiment with creating your own agents, Relevance AI offers a free plan you can play with.